2017-11-18

I have been working on finishing up the software related to the board. The only feature left on my wish-list was implementing the calibration stuff. The goal was to store the calibration offset in EEPROM and make it adjustable via both the text/CLI interface and the binary protocol. The process was going to touch the graphical interface, the middle-ware that sits between the interface and the board, and the board itself. Basically all the software I wrote for this project would have to be modified.
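Here is a minimal C sketch of how a calibration offset might be persisted. Everything in it is an assumption rather than the board's actual firmware: the record layout, the `CAL_MAGIC` value, the tenths-of-a-degree unit, the function names, and the plain byte array standing in for the PIC24's EEPROM. The magic number and checksum let the firmware detect an erased or half-written record and fall back to a zero offset instead of applying garbage.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical EEPROM record: magic number, offset, checksum.
 * The EEPROM itself is simulated with a byte array here; real firmware
 * would go through the part's EEPROM/flash access routines instead. */
#define CAL_MAGIC   0xCA1Bu
#define EEPROM_SIZE 64

typedef struct {
    uint16_t magic;
    int16_t  offset_tenths;   /* assumed unit: tenths of a degree */
    uint16_t checksum;
} cal_record_t;

static uint8_t eeprom[EEPROM_SIZE];   /* stand-in for the real EEPROM */

/* Cheap checksum over magic and offset; catches erased or torn writes. */
static uint16_t cal_checksum(const cal_record_t *r)
{
    return (uint16_t)(r->magic + (uint16_t)r->offset_tenths + 0x5A5Au);
}

/* Called from either the text/CLI handler or the binary protocol handler. */
void cal_save(int16_t offset_tenths)
{
    cal_record_t r = { CAL_MAGIC, offset_tenths, 0 };
    r.checksum = cal_checksum(&r);
    memcpy(eeprom, &r, sizeof r);
}

/* Returns the stored offset, or 0 if the record is missing or corrupt. */
int16_t cal_load(void)
{
    cal_record_t r;
    memcpy(&r, eeprom, sizeof r);
    if (r.magic != CAL_MAGIC || r.checksum != cal_checksum(&r))
        return 0;
    return r.offset_tenths;
}

/* Apply the stored offset to a raw temperature reading. */
int16_t apply_calibration(int16_t raw_tenths)
{
    return (int16_t)(raw_tenths + cal_load());
}
```

Keeping both interfaces funnelled through `cal_save()` means the text and binary paths cannot disagree about how the value is stored.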

The text interface portion went as well as could be expected. The binary protocol portion, on the other hand, went less than smoothly. Basically everything broke. I would hit the “save calibration values” button and everything crashed: the middle-ware started vomiting error messages, the board started rebooting, and I started swearing.

The underlying cause seemed to be a perfect storm of broken silicon, shoddy code, and goofy operating systems.

Apparently, up until I added binary protocol support for the calibration stuff, everything was basically a thick shit-suspension. The calibration stuff was the one shit-crystal that made the solution precipitate out. In no particular order, here are the causes of the failures:

- Slow and bloated firmware code did not have the oomph to keep up with the little bit of extra data thrown at it by the calibration stuff. My excuse is that I was relying on the hardware flow control mechanism to keep the incoming data at bay. This did not work as I expected; more on this below.

- The middle-ware communications code was working purely by luck. As soon as just a little bit of extra load was put on it, it fell apart.

- The PIC24 UART FIFO is broken. That’s well documented and I’ve mentioned it before. The real-world implication is that the chip has to service each and every incoming byte before the next one arrives. This is a non-optimal situation, but not a show-stopping one. In principle, hardware flow control should kick in as soon as that one byte is received and signal the sender to stop sending data. This works great in theory, until the sender decides to disregard the flow control and just keeps sending. This leads us to…

- The complete disregard for flow control by the sender. In my case that’s a Linux machine with standard serial hardware. I don’t know whether Linux or the serial port hardware is to blame, but the end result is a complete and wanton disregard for hardware flow control.
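To make the flow control discussion concrete, here is a minimal C sketch of a receive path that assumes the hardware FIFO cannot be trusted: the UART receive interrupt moves every byte into a software ring buffer immediately and drops RTS once the buffer passes a high-water mark. The buffer size, the watermarks, `rts_ready` (a stand-in for driving the actual RTS pin) and the function names are all hypothetical. The gap between the high-water mark and the buffer size is headroom for bytes a sender keeps transmitting after RTS goes inactive; `rx_overflows` counts what happens when even that is not enough.

```c
#include <stdint.h>
#include <stdbool.h>

#define RX_BUF_SIZE   64u   /* power of two so indices wrap with a mask */
#define RX_HIGH_WATER 48u   /* deassert RTS at or above this fill level */
#define RX_LOW_WATER  16u   /* reassert RTS at or below this fill level */

static volatile uint8_t  rx_buf[RX_BUF_SIZE];
static volatile uint16_t rx_head, rx_tail;   /* free-running write/read indices */
static volatile bool     rts_ready = true;   /* hypothetical stand-in for the RTS pin */
static volatile uint32_t rx_overflows;       /* bytes dropped because the buffer was full */

/* Number of unread bytes; unsigned wraparound keeps this correct. */
static uint16_t rx_count(void)
{
    return (uint16_t)(rx_head - rx_tail);
}

/* Called from the UART receive interrupt for every incoming byte. */
void uart_rx_isr(uint8_t byte)
{
    if (rx_count() >= RX_BUF_SIZE) {
        rx_overflows++;              /* sender ignored RTS long enough to overrun us */
        return;
    }
    rx_buf[rx_head & (RX_BUF_SIZE - 1u)] = byte;
    rx_head++;
    if (rx_count() >= RX_HIGH_WATER)
        rts_ready = false;           /* ask the sender to pause */
}

/* Called from the main loop; returns -1 when no data is waiting. */
int uart_read(void)
{
    if (rx_count() == 0)
        return -1;
    uint8_t byte = rx_buf[rx_tail & (RX_BUF_SIZE - 1u)];
    rx_tail++;
    if (!rts_ready && rx_count() <= RX_LOW_WATER)
        rts_ready = true;            /* enough room again: let the sender resume */
    return byte;
}
```

The low-water mark sits well below the high-water mark so RTS does not toggle on every byte.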

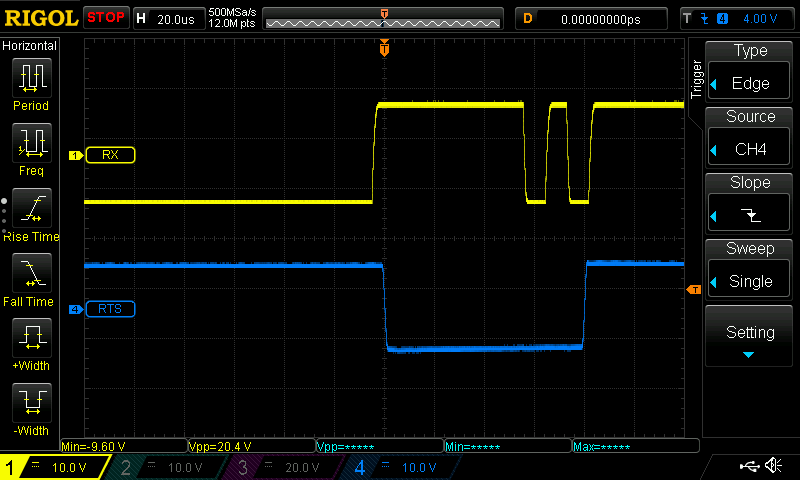

Here’s the scope screenshot to illustrate the situation:

The blue trace is the RTS line. When it goes low (the dip in the middle), all data (the yellow trace) is supposed to stop, but as we can see, it does not. In hopes of not putting too fine a point on this:

So what’s the fix? More sick Russian hardbass tracks turned up very loud? Well sure, that is a fix, but not the fix.

In the end I had to:

- Reduce the interface speed from 115200 bits per second to 19200 bits per second. This is a bit of a lazy fix, but nothing short of a complete overhaul of the communications protocol would be a real fix; we’re talking congestion control, data integrity, etc. I need to read a temperature probe and turn on a relay. That’s it.

- Fix the protocol interpreter in the middle-ware layer. The good news is that it’s a whole lot more robust now.

- Clean up the firmware, specifically the serial communications code. Finally had a good reason to break out the assembler! I also added some statistics counters to track protocol/communications failures, which will hopefully help in future troubleshooting efforts.
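The speed reduction buys time in a way that is easy to quantify. Assuming 8N1 framing (one start bit, eight data bits, one stop bit, so ten bit times per character), the hypothetical helper below computes how long each character occupies the wire, which is roughly the window the firmware has to get each byte out of the UART when the receive path is effectively one byte deep.

```c
/* Assumes 8N1 framing: 1 start + 8 data + 1 stop bit per character. */
#define BITS_PER_CHAR 10.0

/* Time one character occupies on the wire, in microseconds. */
double char_time_us(unsigned long baud)
{
    return BITS_PER_CHAR * 1e6 / (double)baud;
}
```

That works out to about 86.8 µs per byte at 115200 bps versus about 520.8 µs at 19200 bps: six times as long to service each byte.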
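As an illustration of the kind of robustness and bookkeeping described in the list above, here is a minimal sketch of a byte-at-a-time frame parser with statistics counters. The frame format (a `0x7E` start byte, a length byte, the payload, and an XOR checksum), the 32-byte payload limit, and all names are assumptions made for the example, not the project's actual binary protocol. The key property is that any malformed input bumps a counter and sends the parser back to hunting for the next start byte, rather than leaving it stuck.

```c
#include <stdint.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical frame: 0x7E | len | payload[len] | XOR of len and payload */
#define FRAME_START 0x7Eu
#define MAX_PAYLOAD 32u

typedef struct {
    uint32_t frames_ok;
    uint32_t bad_length;
    uint32_t bad_checksum;
    uint32_t bytes_discarded;   /* bytes skipped while hunting for a start byte */
} proto_stats_t;

typedef enum { WAIT_START, WAIT_LEN, IN_PAYLOAD, WAIT_CSUM } parse_state_t;

typedef struct {
    parse_state_t state;
    uint8_t len, pos, csum;
    uint8_t payload[MAX_PAYLOAD];
    proto_stats_t stats;
} parser_t;

/* Feed one byte; returns true when a complete, valid frame is in p->payload. */
bool parser_feed(parser_t *p, uint8_t b)
{
    switch (p->state) {
    case WAIT_START:
        if (b == FRAME_START)
            p->state = WAIT_LEN;
        else
            p->stats.bytes_discarded++;
        break;
    case WAIT_LEN:
        if (b == 0 || b > MAX_PAYLOAD) {
            p->stats.bad_length++;
            p->state = WAIT_START;   /* resynchronise on the next start byte */
        } else {
            p->len = b;
            p->pos = 0;
            p->csum = b;             /* checksum covers the length byte too */
            p->state = IN_PAYLOAD;
        }
        break;
    case IN_PAYLOAD:
        p->payload[p->pos++] = b;    /* pos < len <= MAX_PAYLOAD here */
        p->csum ^= b;
        if (p->pos == p->len)
            p->state = WAIT_CSUM;
        break;
    case WAIT_CSUM:
        p->state = WAIT_START;
        if (b == p->csum) {
            p->stats.frames_ok++;
            return true;
        }
        p->stats.bad_checksum++;
        break;
    }
    return false;
}
```

Exposing `proto_stats_t` through the text interface would be one way to read the counters back when troubleshooting.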

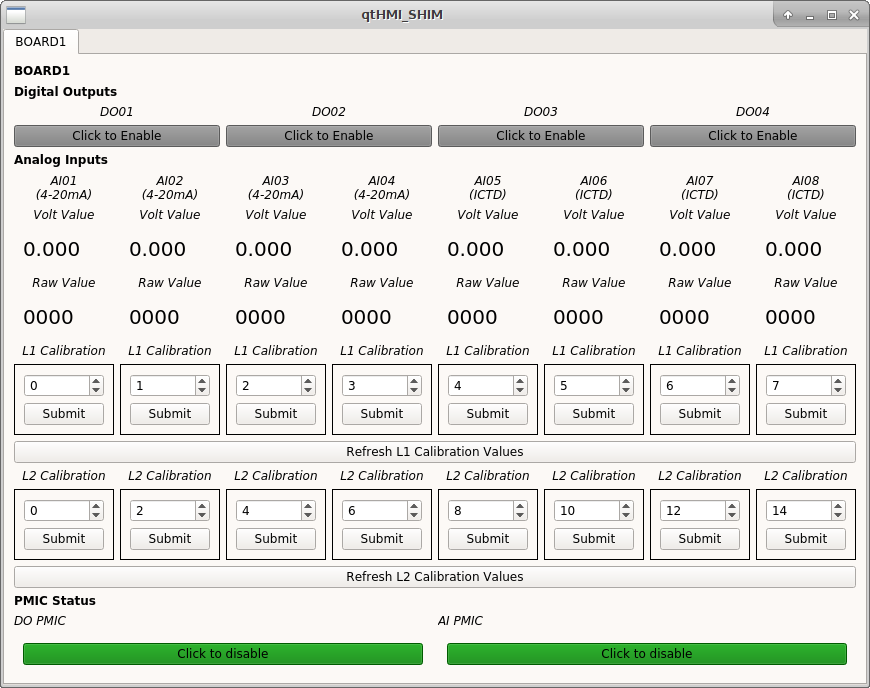

Gratuitous screen shot of the graphical interface with the calibration additions: